Project

HappyPath AI

My Role

Co-Founder, Head of Product & Design

Category

Startup

Year

2024-2025

Summary

Conceptualized and built a product guidance agent for B2B SaaS

- Overview

- The Problem

- The Vision: HappyPath AI

- The Process

- v0: Automated Workflows

- v1: Intent based guidance

- Final: Product Guidance AI Agent

- First Time User Experience

- 1. Installing HappyPath

- 2. Adding Context (Docs, etc)

- 3. Adding Workflows

- 4. Customizing Widget

- Ongoing User Experience

- 1. Insights, Conversations & Users

- 2. AI Studio

- Widget Design

- Design System

- Running a Beta Program

Overview

HappyPath AI is an adaptive, AI-native product guidance agent designed to reduce time-to-value in complex B2B SaaS tools. The idea grew out of our experience streamlining the UX of complex B2B SaaS products, a problem that remained largely unsolved: users were still getting lost in complex workflows despite beautiful UIs, onboarding tours, and help centers.

I started HappyPath with some classmates from CMU. As the Head of Product & Design, I conducted early research, created design concepts, and prototyped the first version. We shipped the v1 product, ran a beta program with 8 users and secured them as design partners.

The Problem

B2B SaaS tools are powerful but notoriously difficult to navigate. Users face friction due to feature overload, divergent workflows, and generic onboarding.

Despite investments in walkthrough tools (e.g. Pendo), product tours, help centers, and redesigns, users continue to struggle with:

- Figuring out where to start

- Knowing how to reach value quickly

- Understanding what actions matter in their context

The Vision: HappyPath AI

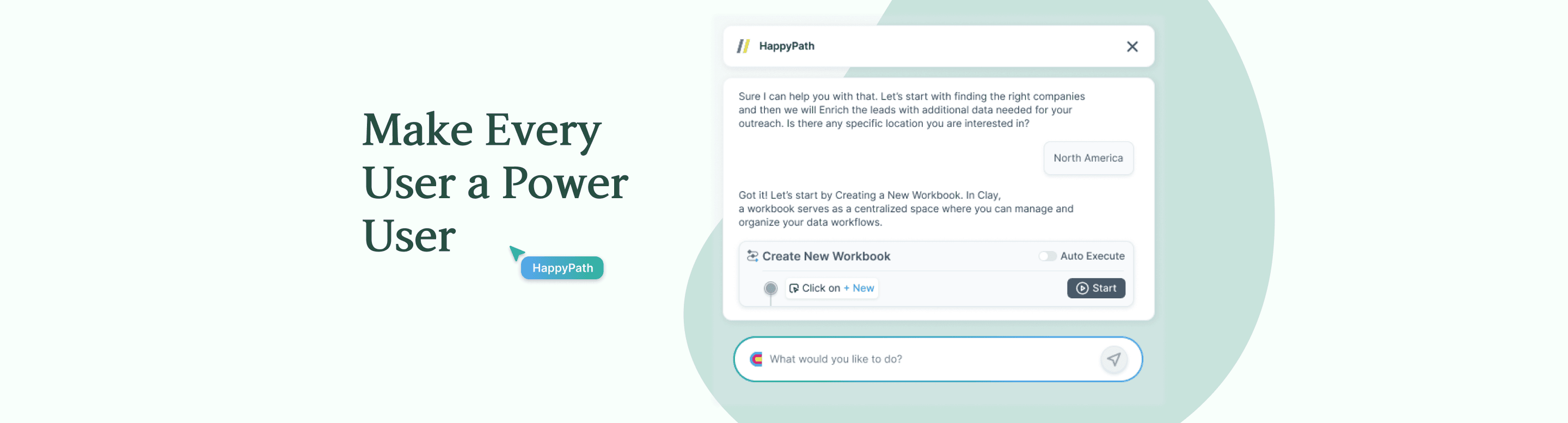

HappyPath is an AI-native product guidance agent that adapts to what each user is trying to do, right inside the product. Rather than a one-size-fits-all tutorial, it delivers context-aware support, personalized workflows, and just-in-time nudges. Our goal was to help users get unstuck without leaving the UI.

Demo Video

This short demo showcases HappyPath in action with Clay.com. It highlights the adaptive nature of the system and how it enhances user onboarding and productivity without relying on rigid walkthroughs or hardcoded flows.

What it does:

- Understands context: Pulls from documentation, past support tickets, usage logs.

- Gathers user intent: Converses with users to understand their goal.

- Watches the UI live: Uses a lightweight co-browsing layer to follow user actions.

- Generates in-context guidance: Offers dynamic hints or flows relevant to what the user is trying to do.

- Offers automation of workflows: Allows users to "auto-execute" workflows to save time and effort.

- Adapts to user feedback: Unlike static guides, the user can provide feedback and shape the guidance to be more relevant to them.

- Learns over time: Improves based on behavioral patterns and success rates.

The Process

1. Problem Validation

We began by going deep into the real-world onboarding and adoption challenges facing SaaS teams. I conducted over 80 qualitative interviews with product managers, CS leaders, growth marketers, and designers across various industries and company sizes.

These conversations revealed a persistent pattern: despite investments in onboarding tools and redesigns, users were still getting stuck. They didn’t just need instructions; they needed intelligent guidance that aligned with their goals and context.

Some key findings:

- Teams were investing in onboarding tools and help docs, but users still struggled.

- Tools like Pendo and WalkMe were task-focused, not outcome-focused.

- Teams lacked visibility into whether users actually understood why they were doing something—not just how to do it.

- Maintenance was a major burden: scripted tours broke with every UI update.

The problem was clear: guidance needed to be contextual, adaptive, and tailored to user intent.

2. Defining the Ideal Customer Profile (ICP)

We synthesized the user interviews and market analysis into a clear Ideal Customer Profile, looking for companies and personas where the pain was most acute. This turned out to be mid- to growth-stage B2B SaaS businesses with:

- High level of feature complexity

- Self-serve onboarding or freemium funnels

- High support volume during onboarding

- PLG motions with product teams that valued data-driven decisions

The ICP definition helped us prioritize features, messaging, and integration strategies from the start.

| Criterion | Details |
|---|---|
| Industry | B2B SaaS - CRM, BI & Analytics, Monitoring Tools |
| | High TTV (4-12 months) lowers adoption rate which impacts revenue |
| | High CAC has an impact on revenue. Companies are moving towards a PLG approach |
| Geography | North America has the highest market (35%-45%) for B2B SaaS companies. |
| | APAC is growing at a faster rate than any other region. |
| Revenue | Scale Ups ($1-10 million ARR) |
| | Don’t have extensive engineering resources to build it in house |
| | Need to apply a PLG approach to scale customers |
| | AI will become a significant part of the budget. |
| Employees | > 20-30 employees growing higher than 20% per year |
| | < 1000 employees |
| | Product Mgt Team at this size is between 5 - 15 people. Have a decent budget for R&D. |
| Key Challenges | Company is scaling and product is not self serve - hence dependent on growing account service teams to acquire more users. |
| | Users have a high TTV, can cause low adoption and churn |
| | Product has a lot of feature capability making it difficult for users to configure and use. |
| | Software is bloated - not easy to simplify workflows and add & maintain new features. |
| Value Prop | Makes the product self serve, reduces time to value - leads to quicker scaling and lower churn |
| | Improves user experience by giving access to information quickly, making it more likely for users to renew |
| | Faster to integrate this than build and maintain it in the existing codebase. |

3. Conducting Market Research

To ensure we were solving a real and scalable problem, I conducted a detailed competitive analysis alongside a top-down market sizing exercise. I benchmarked existing digital adoption tools such as Pendo, WalkMe, and Appcues, and found two gaps:

- Most relied on hardcoded steps, with no concept of adaptive flows based on real-time user behavior.

- None pulled in dynamic context from documentation or real-time usage.

This revealed an opportunity space: an AI-native assistant that learned, adapted, and explained without the effort and rigidity of traditional onboarding tools.

In parallel, I researched and sized the broader market landscape. By combining behavioral intelligence, documentation parsing, and in-product context, we could deliver a differentiated solution that was faster to deploy and more effective than existing tools.

4. Conceptualizing and Building

Once we had a clear grasp of the problem and opportunity, we entered a phase of rapid experimentation. We worked in hypothesis-driven design sprints, developing mockups or lightweight prototypes to test the concept and different interaction models. Our goal wasn’t to perfect the solution right away, but to learn quickly and validate what resonated.

Rapid Experiment Loop

We explored various versions which were tested with target users. Each round of feedback helped us refine not just the UI, but the core behaviors and triggers of the system.

At each stage, we prioritized learning: What worked? What confused users? Where did they hesitate? These insights directly informed what we built next.

As a result, we were able to adopt a lean, iterative process that moved us from concept to real usage quickly.

v0: Automated Workflows

The initial version of HappyPath started with a bold idea: what if users could simply describe what they wanted to do, and the product would do it for them?

At the time, agent-based automation was gaining traction, so we designed and tested mockups of a copilot-style assistant embedded within a SaaS product. The concept was simple: users would type a goal in natural language (e.g., “create a quarterly revenue dashboard” or “set up a new campaign”), and the system would automatically perform the necessary steps inside the UI. To build this, we explored integrations with open-source agents. We spoke with the team at LaVague, a community-led automation project, and experimented with using their browser automation agent to drive end-to-end workflows.

We tested this concept using sandbox environments inside Odoo and Tableau.

Demo

What we built:

- Mockups and scripted prototypes showing a user typing their goal and watching it auto-complete.

- Integration tests with LaVague to automate live UI actions.

- Chrome extension hooks to simulate embedded assistance.

What we observed:

- The agent could complete simple, well-structured tasks (e.g., navigating menus, setting filters).

- It struggled with multi-step, branching workflows that required decisions or user input.

- The experience felt magical when it worked, but:

  - Latency was high (long wait times to complete a task).

  - Cost per execution was non-trivial.

  - Success rate dropped sharply as complexity increased.

Gathering Feedback

We ran concept testing sessions with design partners from Observe AI, Confluent, Atlassian, AWS, and several smaller SaaS companies.

Key Findings

- Teams were excited by the automation concept, but wanted more control and transparency.

- Users didn’t want a chatbot-style experience (“not another Intercom widget”). They preferred something that felt native and embedded in the product UI.

- Nudging users at moments of friction (e.g., repeated failed attempts, time spent on a screen) felt more valuable than proactive instruction.

This round of testing helped us reframe the product: not as a copilot that replaces the user, but as a smart assistant that supports them at the right moments.
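
The friction-triggered nudging from the findings above can be sketched as a small heuristic. This is a minimal illustration under stated assumptions, not HappyPath's actual implementation: the `ScreenActivity` shape, the `shouldNudge` function, and both threshold values are hypothetical and chosen only for the example.

```typescript
// Hypothetical snapshot of what a user has done on the current screen.
interface ScreenActivity {
  failedAttempts: number;  // e.g. repeated failed attempts at the same action
  secondsOnScreen: number; // time spent on the screen without progressing
}

// Assumed thresholds; real values would need tuning per product.
const MAX_FAILED_ATTEMPTS = 3;
const MAX_SECONDS_ON_SCREEN = 90;

// Returns true when either friction signal reaches its threshold.
function shouldNudge(activity: ScreenActivity): boolean {
  return (
    activity.failedAttempts >= MAX_FAILED_ATTEMPTS ||
    activity.secondsOnScreen >= MAX_SECONDS_ON_SCREEN
  );
}

console.log(shouldNudge({ failedAttempts: 3, secondsOnScreen: 20 })); // true
console.log(shouldNudge({ failedAttempts: 0, secondsOnScreen: 15 })); // false
```

Keeping the rule this simple makes it easy to explain to a product team why a nudge appeared, which speaks to the control and transparency that testers asked for.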

v1: Intent based guidance

In response to earlier feedback, we evolved HappyPath into a hybrid model that offered both intelligent guidance and optional automation. Users could be guided step-by-step based on what they were doing, with the option to automate the entire sequence if desired.

This version introduced a second cursor to point at relevant UI elements in real time. The system interpreted the user’s goal, matched it with their current screen context, and nudged them through the process—almost like having a product expert walking them through the interface.

Building Proof of Concept

We had been hearing variations of the same sentiment from potential users: “If this actually works… it would be amazing.” So this version focused not just on improving UX, but also on testing the underlying technology’s reliability across different SaaS environments. I prototyped using Cursor and Cline as codegen assistants.

What we built:

- A working Chrome extension that:

  - Observed user actions and screen state

  - Surfaced step-by-step guidance relevant to current context

  - Offered optional “auto-complete” functionality for multi-step tasks

- Integrated basic memory: the system could track the user’s previous set of steps and suggest what should come next

- Prototyped and tested directly inside real tools like sales enablement tools, analytics platforms, and form-based SaaS apps

What we observed:

- The technology worked reasonably well. Early tests showed the system could reliably anchor to buttons, input fields, and dropdowns across many common web stacks.

- The widget was able to offer useful guidance through the context provided by the knowledge base articles.

- Users could provide feedback and change the instruction provided by HappyPath, giving them more flexibility than static tours.

Gathering Feedback

Key Findings

- Strong demand for non-static guidance

- HappyPath was seen as more proactive and actionable compared to in-house AI chatbots.

- The widget took up too much screen real estate, which was especially a problem for B2B SaaS products.

- One standout insight: “This is cool, but it still feels like I’m being handed steps. What if the system just knew what I needed next?”

That became a pivotal learning. Users didn’t want generic instructions. They wanted highly adaptive, real-time suggestions that responded to what they’d just done. This insight directly informed our next iteration, which focused on generating next steps dynamically based on prior user actions, making the experience feel truly personalized.

Final: Product Guidance AI Agent

Next, we focused on building a more robust, production-ready version of HappyPath, one that could be reliably tested inside real SaaS environments.

What we built

UX Changes

- Redesigned the interface from a static side panel to a movable widget anchored to the bottom of the screen.

- Introduced two user modes:

  - Conversation Mode: A chat-like interface where users can express intent, clarify instructions, give feedback, or modify steps.

  - Workflow Mode: The widget compresses and shifts focus to a cursor-driven overlay that actively guides the user step-by-step through the UI.

Technical Changes

- Built two deployment modes:

  - Chrome extension for demo and internal testing.

  - Embeddable JavaScript snippet for integrating HappyPath directly into partner environments without installation overhead.

- Found a workaround that avoided browser automation stacks like Playwright or Puppeteer, which would have required installation on the user's side.

- Implemented a screenshot-based feedback loop, capturing UI state and user actions at every step. This allowed us to understand where users were in the workflow and determine the best next step dynamically.
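
The "determine the best next step" part of the feedback loop above can be illustrated with a small sketch. It assumes the screenshot analysis has already been reduced to a current screen name and a list of completed step IDs. All type and function names here (`WorkflowStep`, `ObservedState`, `nextGuidance`) are hypothetical and not taken from the HappyPath codebase.

```typescript
// One step of a recorded workflow (hypothetical shape).
interface WorkflowStep {
  id: string;
  screen: string;      // screen on which the step is performed
  instruction: string; // text shown to the end user
}

// What the feedback loop is assumed to know after the latest capture.
interface ObservedState {
  currentScreen: string;
  completedStepIds: string[];
}

type Guidance =
  | { kind: "step"; step: WorkflowStep }
  | { kind: "navigate"; toScreen: string }
  | { kind: "done" };

// Picks the first unfinished step; if the user is on a different screen,
// guide them back there before showing the step itself.
function nextGuidance(workflow: WorkflowStep[], state: ObservedState): Guidance {
  for (const step of workflow) {
    const alreadyDone = state.completedStepIds.indexOf(step.id) !== -1;
    if (alreadyDone) continue;
    if (step.screen !== state.currentScreen) {
      return { kind: "navigate", toScreen: step.screen };
    }
    return { kind: "step", step };
  }
  return { kind: "done" };
}

const recordedWorkflow: WorkflowStep[] = [
  { id: "open-reports", screen: "home", instruction: "Open the Reports tab" },
  { id: "new-dashboard", screen: "reports", instruction: "Click New dashboard" },
];

const guidance = nextGuidance(recordedWorkflow, {
  currentScreen: "settings",
  completedStepIds: ["open-reports"],
});
console.log(guidance.kind); // "navigate"
```

Because the guidance is recomputed from observed state on every capture, a user who wanders off the expected path is steered back rather than left with a broken scripted tour.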

First Time User Experience

1. Installing HappyPath

Home Screen Empty State with Onboarding steps

User can copy the JS snippet

User gets confirmation once installed

Onboarding Status is updated

2. Adding Context (Docs, etc)

Empty State for adding documentation

User can add external & internal documentation as context

User can view, add or modify the context for the agent

User can see a structured map of how the agent will provide guidance

3. Adding Workflows

User can add structured workflows to HappyPath

They simply need to record the steps in the UI

User can review, add or modify the steps in the workflow

User can view all the added workflows in a dashboard

4. Customizing Widget

User can customize the HappyPath widget to match their UI

Ongoing User Experience

1. Insights, Conversations & Users

User can view insights about the widget performance, workflows, top use cases and pain points

They can also segment the end users by cohorts, role, etc. and get targeted insights on their specific problem areas

They can also review the drop-off points in the user journey and understand their use cases

User can drill down to specific conversations and see where their end-users are facing issues

2. AI Studio

Users can customize prompts by persona, underlying model configuration, etc.

Users can create multi-step prompts to guide the agent's behavior.

Based on where the end-users are getting stuck, HappyPath provides UI feedback along with suggested fixes. PMs can review the sources and create tickets.

HappyPath also detects mismatches between the current UI and outdated documentation, providing a list of fixes that the user can review and apply directly.

Widget Design

Conversation Mode

The customized widget shows up in the user's product interface. This widget starts with a conversational interface where end-users of the product can express their use case, clarify their requirements, and get an explanation of features and workflows. They can switch back and forth from the Workflow mode to provide feedback and change the navigational flow.

Workflow Mode

To optimize for real estate, the widget compresses into a compact version while navigating the user through the UI. It also spins up a second cursor that points at the UI elements. End-users have the option to auto-execute the entire workflow if they do not want to go through each step manually.

Design System

Basic Styles (built on top of Next.js)

Base Components

Page Layout

Running a Beta Program

With a stable, testable version of HappyPath ready, we launched a private beta to evaluate the product in real SaaS environments. We focused our outreach on product and growth teams with complex onboarding challenges, limited engineering bandwidth, and a strong interest in PLG (product-led growth).

We framed the pitch around a simple question: "What if your product could explain itself?"

How we approached it

- Created a landing page with a concise narrative, a demo video, and a beta sign-up form.

- Used LinkedIn outreach, warm intros, and 1:1 product walkthroughs to recruit beta users.

- Recruited 8 teams across SaaS domains like DevOps, analytics, compliance, and developer tools.

- Helped them onboard using our lightweight JavaScript embed or Chrome extension.

- Ran structured calls where we observed live usage, guided setup, and gathered feedback in real time.

What we tracked and learned

- User behavior: We closely monitored how users moved through workflows, where they got stuck, and whether guidance appeared at the right time.

- Hint accuracy: We measured the relevance and usefulness of each suggestion, using both qualitative comments and behavioral indicators (completion rate, dismissals, hesitations).

- Edge cases: We captured breakpoints where UI drift, unexpected layouts, or embedded widgets interfered with guidance accuracy.

- Feedback channels: We established a tight feedback loop. Users could give feedback in Discord, and we used it to inform weekly design and engineering sprints.
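
As an illustration of the behavioral indicators behind hint accuracy, the sketch below summarizes completion and dismissal rates from a list of hint events. The event shape, the `"ignored"` outcome (standing in for hesitation), and the `summarizeHints` function are assumptions made for this example and do not describe the actual tracking pipeline.

```typescript
// Hypothetical outcomes for a hint that was shown to an end user.
type HintOutcome = "completed" | "dismissed" | "ignored";

interface HintEvent {
  hintId: string;
  outcome: HintOutcome;
}

interface HintStats {
  shown: number;
  completionRate: number; // share of shown hints whose step was completed
  dismissalRate: number;  // share of shown hints the user closed
}

// Aggregates raw hint events into simple rates; returns zeros for no data.
function summarizeHints(events: HintEvent[]): HintStats {
  const shown = events.length;
  if (shown === 0) {
    return { shown: 0, completionRate: 0, dismissalRate: 0 };
  }
  const completed = events.filter((e) => e.outcome === "completed").length;
  const dismissed = events.filter((e) => e.outcome === "dismissed").length;
  return {
    shown,
    completionRate: completed / shown,
    dismissalRate: dismissed / shown,
  };
}

const stats = summarizeHints([
  { hintId: "h1", outcome: "completed" },
  { hintId: "h2", outcome: "completed" },
  { hintId: "h3", outcome: "dismissed" },
  { hintId: "h4", outcome: "ignored" },
]);
console.log(stats.completionRate, stats.dismissalRate); // 0.5 0.25
```

Rates like these only complement the qualitative comments gathered on calls; a high dismissal rate flags a hint worth reviewing, but it does not explain why users closed it.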